The Great Unlearning in the Age of AI

As AI reshapes the web, our grip on knowledge, trust, and shared reality is slipping.

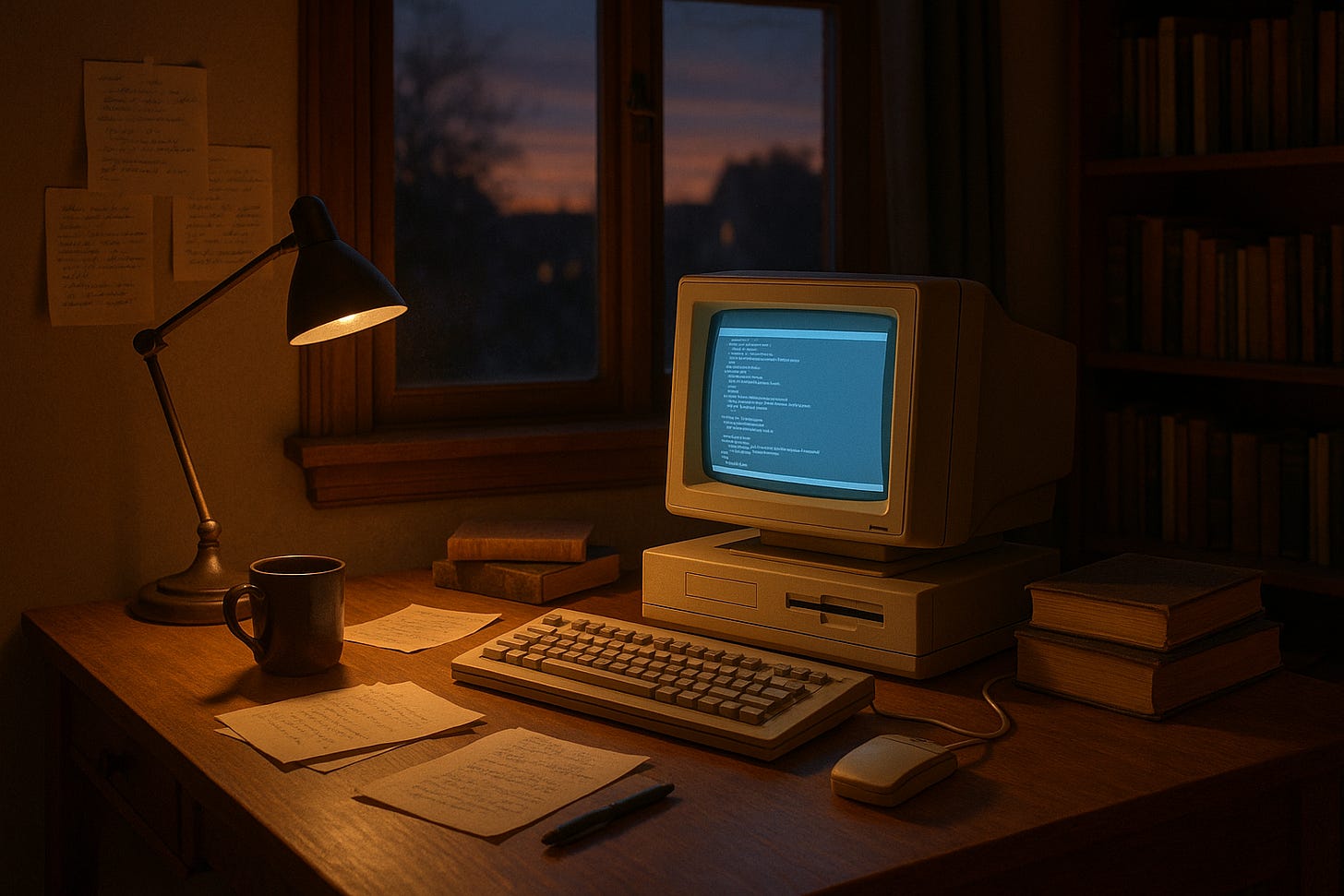

Am I the only one who thinks back fondly to the early days of the internet? Back when it felt like an endless library at your fingertips? Seriously, it was like someone had waved a magic wand. Global access to knowledge was suddenly available with just a dial-up connection, and it was glorious!

They called it the "information superhighway." It was a wellspring of learning, a place where knowledge was democratised. In an instant, you could dive into quantum physics, pick up a new language, trace your family tree, or double-check a fact on Google. It honestly felt like one of humanity’s most exhilarating leaps forward. We shared a (somewhat misguided) optimism that we were entering a new age of enlightenment.

The Great Flood of Digital Slop

Fast forward a couple of decades, and that wellspring feels poisoned.

The clear, trustworthy information we once celebrated is now choked with noise, confusion, and blatant disinformation. Somewhere along the way, the internet's original promise — open, quick access to reliable knowledge — got buried under layers of clickbait, conspiracy, and algorithmically generated sludge.

And let’s be honest: we let it happen. We cheered on the arrival of AI, dazzled by its power to summarise, synthesise, and create. But AI doesn’t know what’s true. It doesn’t care. It’s not discerning. It scrapes indiscriminately, weaving together content from the brilliant to the utterly bogus, often with a tone of quiet authority convincing enough to fool the masses.

That seemingly trustworthy guide on repairing your 1978 Kawasaki? It might’ve been stitched together by a bot that’s never even seen a wrench.

The Unseen Cost of a Broken Promise

To me, this is a real loss. Something sacred has been eroded.

Our kids are growing up in a world where “just Google it” comes with a huge asterisk. They’re learning, far too early, that what passes for knowledge isn’t always knowledge, and what looks like truth isn’t always true. That what ranks highest might not be what’s right, and what spreads fastest is often the most inflammatory rather than the most factual.

The sheer volume of digital rubbish has reached a point where it’s exhausting to sift through the noise. And that exhaustion breeds disengagement. Why bother digging for the truth when the loudest headline wins?

It feels a bit like we opened Pandora’s Box, drunk on innovation, seduced by the speed and spectacle of it all. The “tech bros” gave us their shiny toys, and we didn’t ask enough hard questions about the trade-offs. What happens when virality beats nuance? When algorithms replace editors? When speed and scale eclipse wisdom? I fear that we have passed the tipping point, and the damage can’t be undone.

When the Information Well Runs Dry

So, what’s the real consequence of all this?

Could this herald the erosion of something much more fundamental: our shared reality?

Without reliable access to facts, how do we agree on anything? How do we make progress on climate change, public health, or even neighbourhood planning if everyone’s “facts” come from a different, equally unreliable digital swamp?

When every conversation starts from a different version of “truth,” we stop having constructive debates, and communication breaks down entirely.

Critical thinking falters, and discernment takes a back seat. In the chaos, we gravitate toward what’s easiest, what confirms our fears or flatters our opinions: content that is often engineered for precisely that effect. The more we live in our own echo chambers, the more polarised we become.

A Call to (Human) Intelligence

This is a genuine human crisis; it’s about so much more than the technology gods we worship.

Because if we lose our grip on truth, we lose our grip on each other. Societies thrive on a foundation of shared understanding. Without that, everything becomes friction: conflict without thoughtful conversation, reaction without reflection.

The internet was supposed to be our greatest teacher. Instead, it’s becoming a hall of mirrors.

But maybe, just maybe, it’s not too late. Perhaps it’s time to stop blindly trusting the tools and start remembering who they were meant to serve. Technology and innovation, as brilliant and unstoppable as they are, still need us: our historical knowledge, our emotional intelligence, our ethics. Now, more than at any point in recent history, we need editors, philosophers, and journalists. Teachers, artists, and thinkers.

Without human depth, critical thinking, and moral backbone, our shiny new tools do more than fail us; they deceive us.

And if we stay asleep, we risk letting the very source of knowledge we once built to enlighten the world burn to the ground.